Dynamic images with Vercel OG

Dynamic, data-driven images and media are useful for digital experiences of all kinds. In this post, I'll look at one application and technology solution in particular: using Vercel's OG library to generate dynamic, branded Open Graph images for social link previews.

Short version: take an image, such as the one at the top of this page (from a family road trip), pipe it into Vercel's OG service with some data parameters, merge it with a template, and get back a custom image that the application can expose as needed. The output, in this case, looks like this:

I created a fictional brand to showcase this application, with a logo, custom typeface, colors, and graphic flourishes, to illustrate the things a brand needs in its dynamic images. This takes the solution beyond two other approaches we commonly see in digital experience stacks: simple compositing (layering one image over another) and multi-modal generative AI approaches, which, at the moment, are abysmal at typography and staying on-brand.

There have been a variety of tools over the years for this kind of dynamic image generation. 24 years ago we might have used Adobe Flash Generator (which actually wasn't bad, but cost tens of thousands and was super slow). Other options have included scripting Photoshop, using ImageMagick from PHP, or, these days, using Puppeteer to capture web templates.

Some of these methods are still in use but have different trade-offs. Vercel tried to emphasize speed with their OG library:

Our social card generation previously used a compressed Chromium release to fit inside the 50mb Serverless Function limit. Due to the size of Chromium, images could take up to 5 seconds to generate, making sharing links feel slow. With Vercel OG, images render almost immediately. — Ben Schwarz

Solution Process

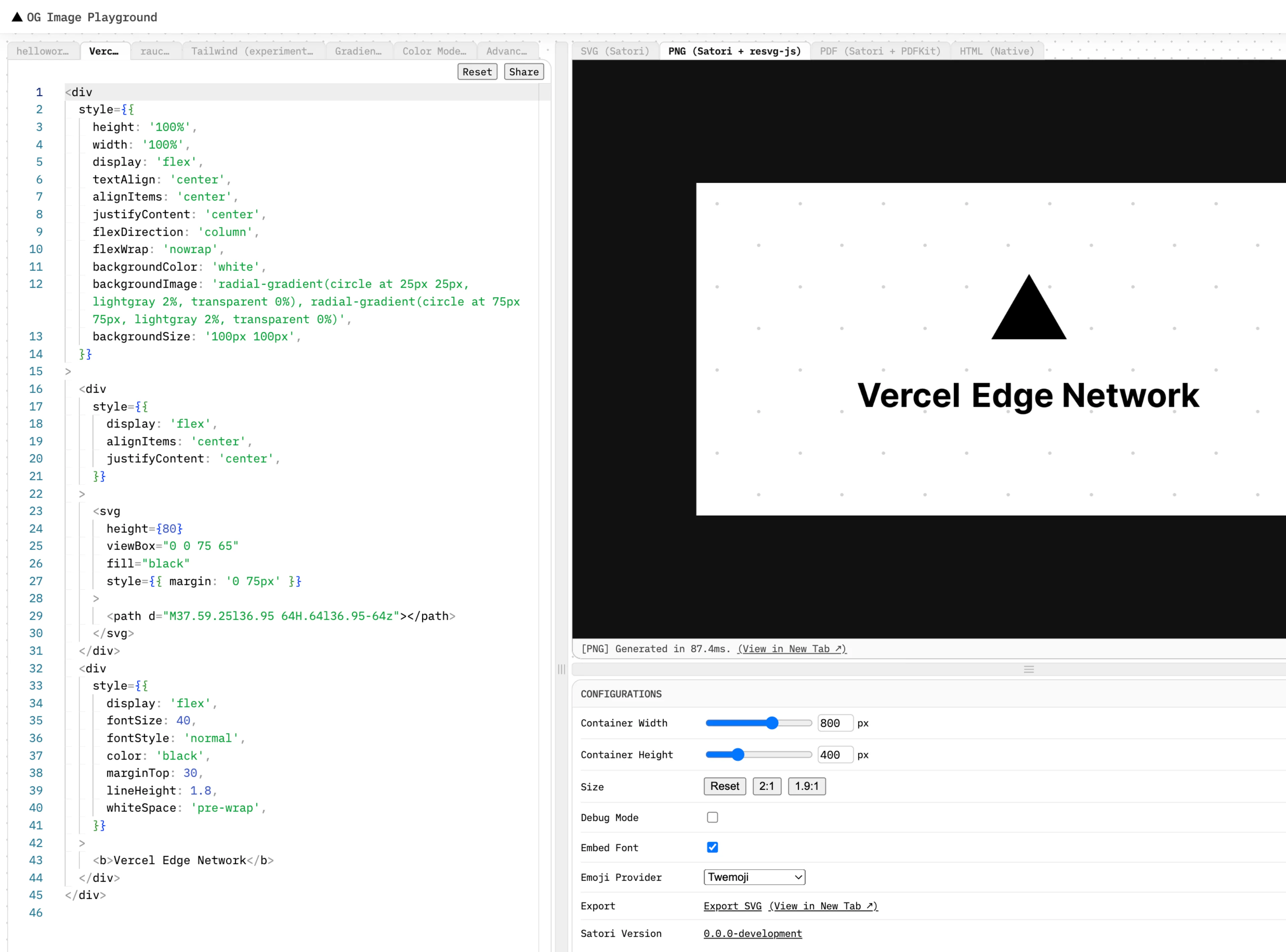

I started the design process in Figma, so I could think through what I wanted quickly. Figma's Dev Mode can be useful here, as the layout engine is sort of its own tool within the web application (this could be improved upon to use design tokens, but that's a future exercise).

To create the Open Graph images on demand, several things need to happen:

Setting up the custom route in the Next app, detailed here, such as

/app/api/og/route.tsx

Check out my repo to see how I built this layout, starting from the above instructions.

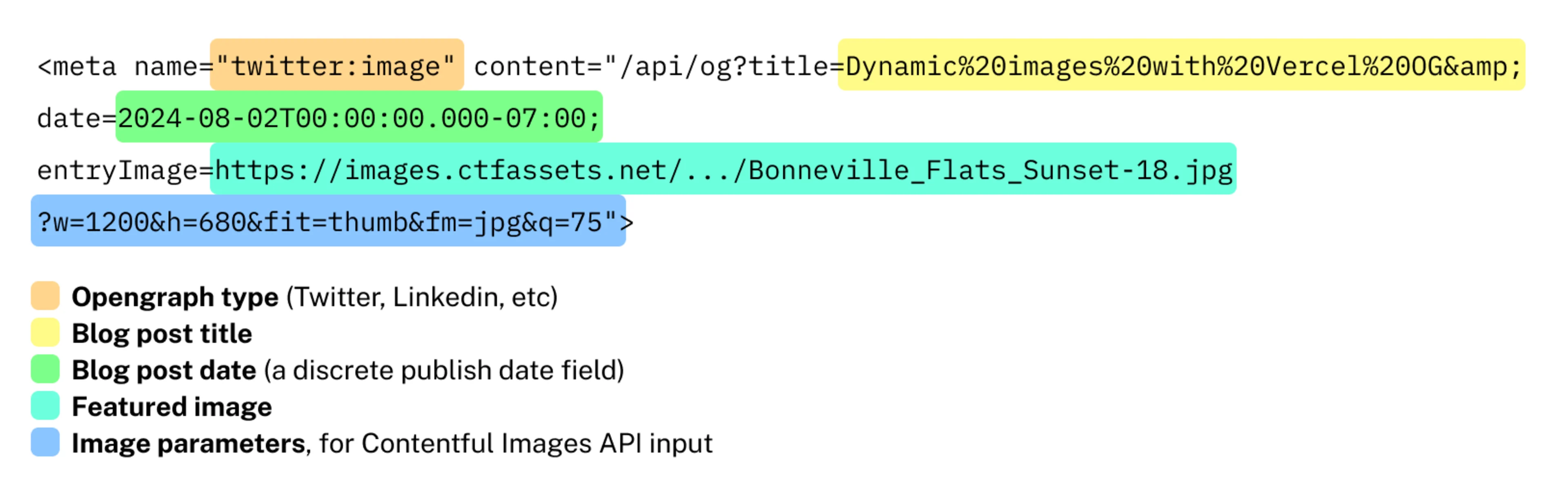

Consume the new OG route in Next's metadata, generating the various meta tags. This takes the template, passes in any text strings, and renders the image.
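To make the hand-off between those two steps concrete, here is a minimal sketch of the plumbing, not the code from my repo. It assumes the route lives at `/api/og` and takes `title` and `image` query parameters. Those parameter names, the 80-character title limit, and all three helper functions are hypothetical. On the metadata side, a helper builds the image URL. On the route side, a helper reads the same parameters back with safe defaults. In a real route those parsed values would then be handed to the `ImageResponse` template.

```typescript
// Hypothetical parameter shape for the OG template; the field names are
// illustrative and not taken from the original project.
interface OgParams {
  title: string;
  image?: string; // URL of the background photo
}

const OG_ROUTE = "/api/og"; // assumed path, served by app/api/og/route.tsx
const MAX_TITLE_LENGTH = 80; // assumed limit so long titles still fit the layout

// Metadata side: build the URL that the og:image meta tag will point at.
function buildOgImageUrl(siteUrl: string, params: OgParams): string {
  const url = new URL(OG_ROUTE, siteUrl);
  url.searchParams.set("title", params.title); // searchParams handles encoding
  if (params.image) {
    url.searchParams.set("image", params.image);
  }
  return url.toString();
}

// Route side: read the same parameters back out of the incoming request URL,
// falling back to a default so the template always has something to render.
function parseOgParams(requestUrl: string): OgParams {
  const { searchParams } = new URL(requestUrl);
  const rawTitle = searchParams.get("title")?.trim() || "Untitled";
  const title = rawTitle.slice(0, MAX_TITLE_LENGTH);
  const image = searchParams.get("image") ?? undefined;
  return image ? { title, image } : { title };
}

// An object in the general shape of the `openGraph` field of Next's metadata.
// 1200x630 is the size commonly used for Open Graph images.
function buildOpenGraphMetadata(siteUrl: string, params: OgParams) {
  return {
    openGraph: {
      title: params.title,
      images: [
        { url: buildOgImageUrl(siteUrl, params), width: 1200, height: 630 },
      ],
    },
  };
}
```

Keeping the URL building and the parsing as a matched pair means the page and the route agree on parameter names, and the defaults keep a malformed or hand-edited link from producing a blank card.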

There was a bit of trial and error, but I ended up with output like this, broken down here:

Potential Applications

While this is optimized for Open Graph images and well integrated into the Next stack, there are applications beyond Open Graph previews. It suits anything that needs to be a bitmap, and not just an HTML layout:

Event tickets, as Vercel has shown in the past

Media for other channels such as video, email, and social art. Bannerbear has a fantastic inspiration page with template examples using their product (which has a nice UI, but similar compositing limitations so the tech may be similar). I ran a hackathon project that built a Contentful integration you can watch here.

Video poster images on the fly, perhaps derived from frames and then having a template applied

Media that needs further transformation or compositing downstream. Note that Satori can output SVG as well, which won't work for Open Graph images but could be useful for other artwork.

I was able to build this prototype on top of the Next + Contentful starter in a couple of days. There are still reasons to use Puppeteer with Chromium for some tasks, but performance at scale has been a problem in the past. While Satori supports a bit of a weird subset of HTML, and in some respects is more like SVG layout and composition, it does offer a lot of power as implemented with Vercel OG.